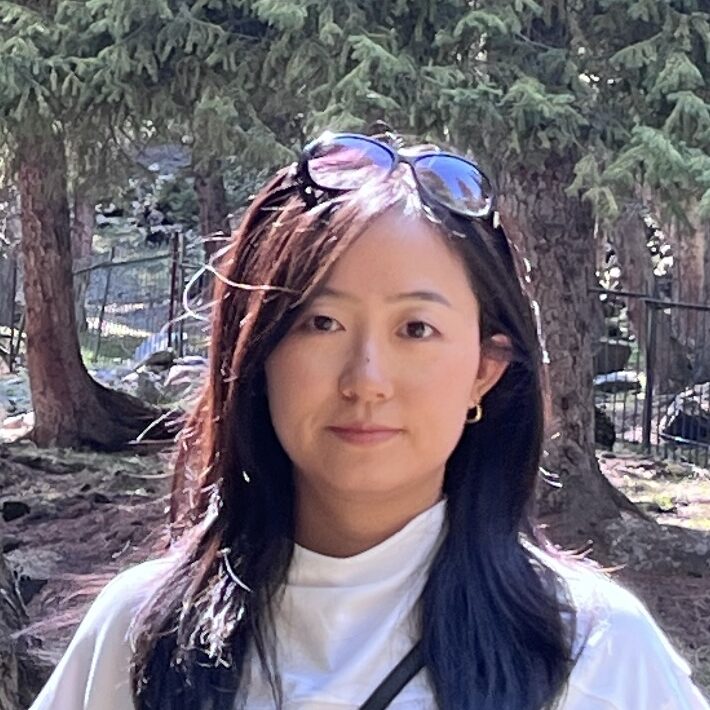

Iolanda Leite, Associate Professor in the Division of Robotics, Perception and Learning at KTH and a member of the Working group Learn at Digital Futures, shares insights on Human-centered Artificial Intelligence.

Hi there, Iolanda Leite. You are the PI of the research project Advanced Adaptive Intelligent Systems (AAIS) at Digital Futures.

What are the challenges behind this project?

– Intelligent systems are becoming ubiquitous in people’s lives, for example in the form of smart appliances, wearables, and, increasingly, robots. As these systems assist an ever wider range of users in their homes, workplaces or public spaces, a typical one-size-fits-all approach becomes insufficient. Instead, these systems will need to take advantage of the machine learning techniques upon which they are built to continually adapt to people and to the shared environment in which they operate.

What is the purpose of this project?

– Our project can be seen as an example of Human-centered Artificial Intelligence. The aim is to develop adaptive social robots that can understand people’s communicative behaviour and task-related physical actions, and adapt their interaction accordingly. The project has two main novel aspects: first, a strong focus on user adaptation, which is crucial in real-world applications but has not been extensively investigated; and second, the involvement of several stakeholders, such as older adults and elderly care providers, in all steps of the development process.

How is the workgroup organized and who participates?

– The project has six PIs, five of them from two different KTH schools – EECS and CBH – and one from Stockholm University. We are an interdisciplinary team of engineers and social scientists, bringing expertise in both technical design and a qualitative understanding of how people interact with and adopt new technologies.

Can you mention some interesting findings or conclusions? Anything that surprised you?

– In the first year, before the pandemic, we explored older adults’ home cooking practices and opportunities for conversational embodied robots through observations and video data. One interesting finding was that in this particular group, people often perform actions out of sequence, which can result in disfluencies – for example, taking a tray out of the oven without making space for it on the counter in advance. If a robot could perceive these actions and anticipate possible disfluencies, it could remind users in advance. We also conducted some laboratory studies on whether a robot’s embodiment affects people’s behavior and attitude towards the robot when it fails. We found that participants were more forgiving of human-like devices when they fail than of less anthropomorphic devices such as smart speakers.

What is the next step? What would you like to see happen now?

– We are planning some fieldwork in elderly care homes to investigate how the technologies we have been developing can better support the elderly and staff in these environments. This part of the work has suffered some delays due to the pandemic. In the past year, we have mostly been making progress on the underlying adaptive technology that will enable robots to improve the health and well-being of the elderly, our target population. For example, my PhD student Youssef Mohamed, who is funded by the project, is working on an automatic system that can detect states like frustration and high cognitive load using not only computer vision and audio but also less explored modalities such as thermal infrared imaging.

Read more and watch the video about the Advanced Adaptive Intelligent Systems (AAIS) project here