Abstract: Approximating and sampling from complex multivariate distributions is a fundamental problem in probabilistic machine learning (e.g., amortised Bayesian inference, conditional generation under priors, robust combinatorial optimisation) and in scientific applications (e.g., inverse imaging, molecular design, modelling of dynamical systems). This talk will be about my recent work on adapting diffusion probabilistic models — a class of neurally-parametrised processes that have recently been recognised as powerful distribution approximators — for the problem of efficient inference and sampling in high-dimensional spaces. Typical training of diffusion models requires samples from the target distribution.

However, when we have access to the target density only via an energy function or a prior-likelihood factorisation, training of diffusion models is more challenging. I will survey reinforcement learning (RL) methods that can be used to train diffusion models in a data-free manner, connecting them to entropy-regularised RL and stochastic control. I will then show how these methods can be applied to intractable sampling problems in a variety of domains: in vision (text-to-image generation with human feedback, imaging inverse problems), language (constrained generation in diffusion language models), and control/planning (policy extraction with a diffusion behaviour policy).

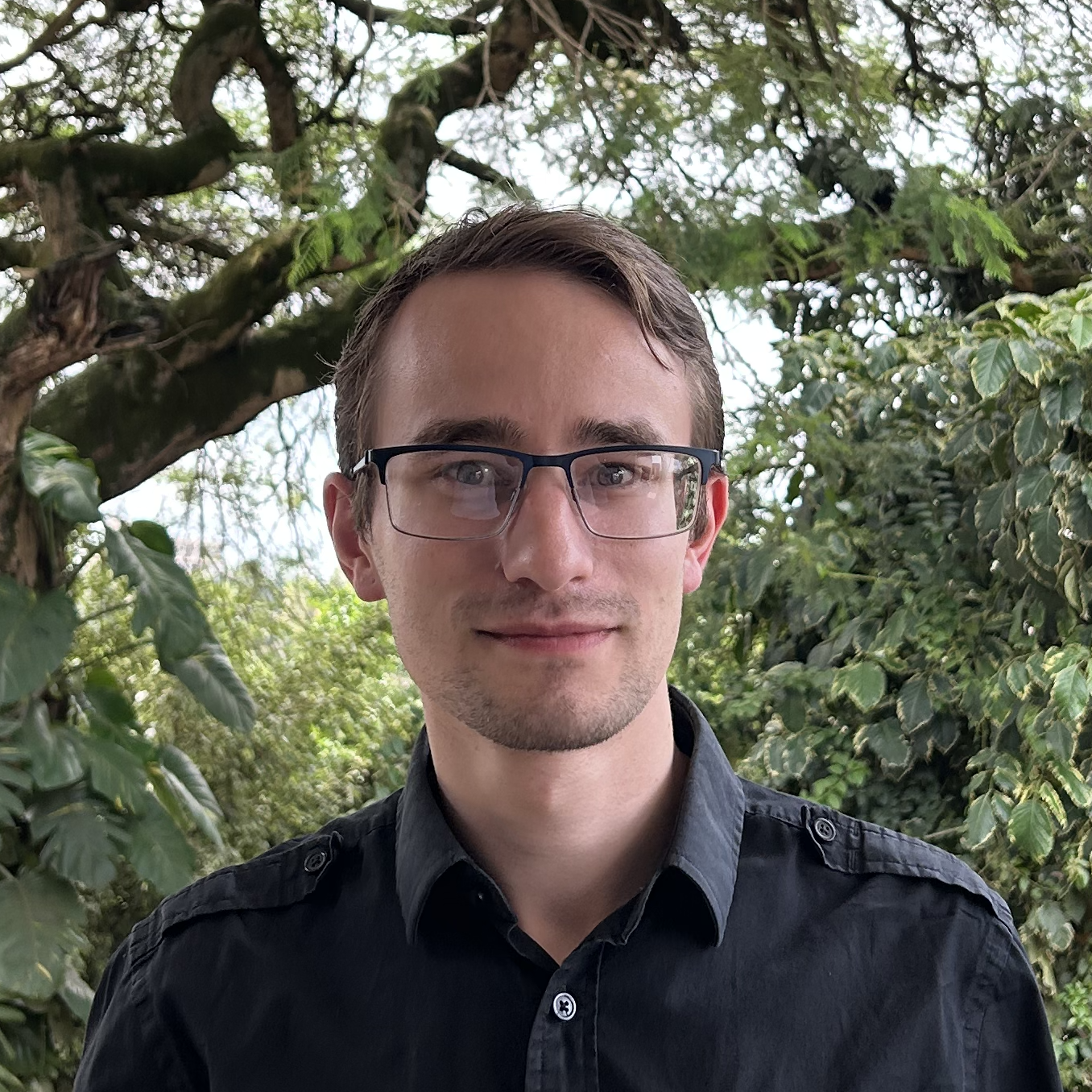

Bio: Nikolay Malkin is a Chancellor’s Fellow (≈ Lecturer / Assistant Professor) at the School of Informatics, University of Edinburgh, with main research interests in induction of compositional structure in generative models, modelling of posteriors over high-dimensional explanatory variables, and Bayesian neurosymbolic methods for reasoning in language and formal systems. Nikolay holds a PhD in mathematics from Yale University (2021) and was a postdoctoral researcher at Mila (Québec AI Institute, Montreal) from 2021 to May 2024.

Date and time: 24 October 2024, 13:00-14:00 CEST

Speaker: Nikolay Malkin, University of Edinburgh

Title: Training diffusion models without data

Where: Digital Futures hub, Osquars Backe 5, floor 2, KTH main campus, or via Zoom

Directions: https://www.digitalfutures.kth.se/contact/how-to-get-here/

OR

Zoom: https://kth-se.zoom.us/j/69560887455

Host: Jens Lagergren
