About the project

Objective

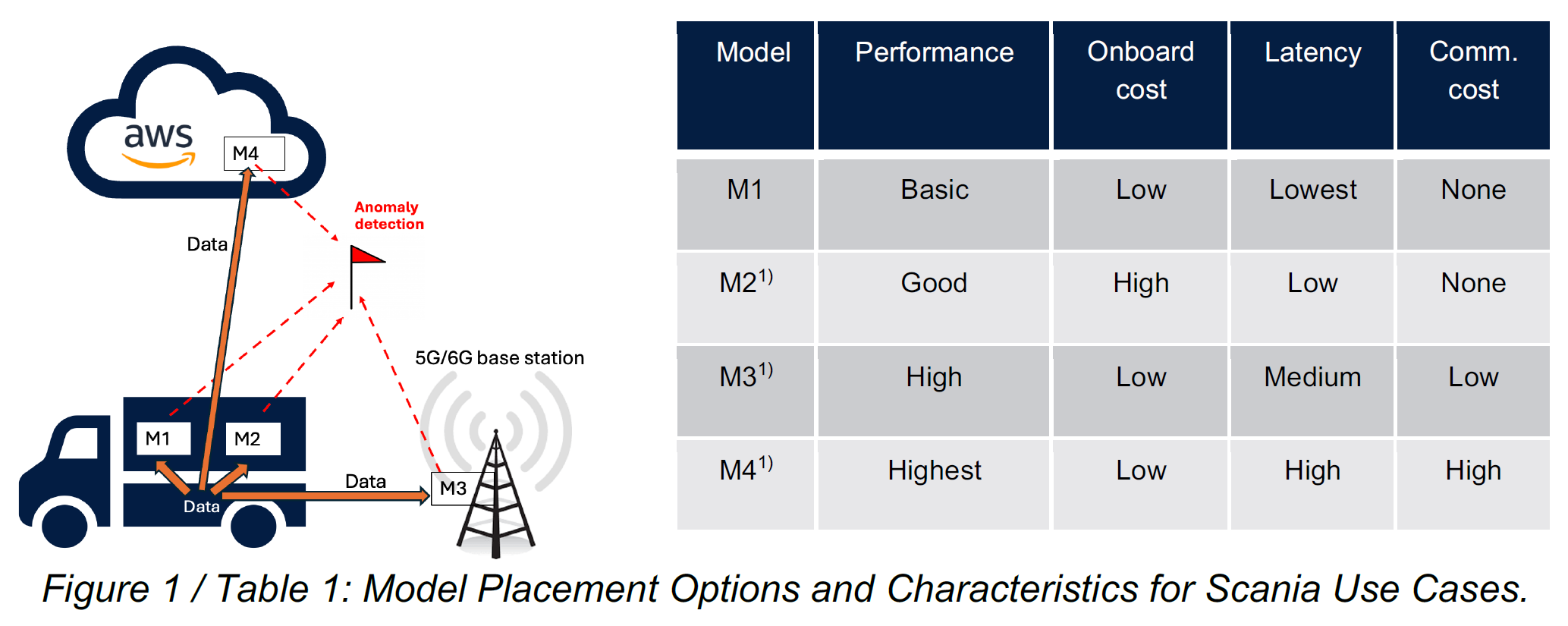

This project aims to develop and evaluate algorithms for dynamic inference offloading in Scania’s fleet, optimizing the trade-off between model accuracy, latency, and resource consumption. In the proposed cascaded inference approach, data is first processed by a small model at the edge (M1). Depending on the confidence of that inference, the system decides in real time whether to offload the task to more complex models (M2-M4), which offer higher accuracy at increased computational cost.
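The cascade described above can be illustrated with a minimal Python sketch. It is not the project's algorithm: it assumes a fixed confidence threshold per stage, whereas the project aims to learn offloading decisions dynamically. The names `Stage`, `cascade_predict`, and `make_toy_model`, along with the cost and threshold values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# A model maps an input to (predicted_label, confidence in [0, 1]).
Model = Callable[[Sequence[float]], Tuple[int, float]]


@dataclass
class Stage:
    name: str         # e.g. "M1" for the edge model
    model: Model
    cost: float       # hypothetical per-inference cost (latency, bandwidth, compute)
    threshold: float  # accept this stage's prediction if confidence >= threshold


def cascade_predict(x: Sequence[float], stages: List[Stage]) -> Tuple[int, str, float]:
    """Run stages in order; stop at the first confident one (or at the last stage)."""
    if not stages:
        raise ValueError("stages must not be empty")
    total_cost = 0.0
    for i, stage in enumerate(stages):
        label, confidence = stage.model(x)
        total_cost += stage.cost
        is_last = i == len(stages) - 1
        if confidence >= stage.threshold or is_last:
            return label, stage.name, total_cost
    raise AssertionError("unreachable: the last stage always returns")


def make_toy_model(sharpness: float) -> Model:
    """Toy stand-in for a DL model: larger `sharpness` mimics a more confident model."""
    def model(x: Sequence[float]) -> Tuple[int, float]:
        score = sum(x) / len(x)  # assumes features lie in [0, 1]
        label = int(score >= 0.5)
        confidence = min(1.0, 0.5 + sharpness * abs(score - 0.5))
        return label, confidence
    return model


# Assumed costs and thresholds, for illustration only.
stages = [
    Stage("M1", make_toy_model(1.0), cost=1.0, threshold=0.8),
    Stage("M2", make_toy_model(2.0), cost=5.0, threshold=0.8),
    Stage("M3", make_toy_model(4.0), cost=20.0, threshold=0.8),
    Stage("M4", make_toy_model(8.0), cost=50.0, threshold=0.0),
]

for sample in ([0.9, 0.9], [0.7, 0.7], [0.55, 0.55]):
    label, served_by, cost = cascade_predict(sample, stages)
    print(sample, "->", label, served_by, cost)
```

In this sketch an easy input is answered by M1 at low cost, while an ambiguous input travels further down the cascade and accumulates the cost of every stage it visits. That accumulated cost is the quantity an offloading policy would weigh against the expected gain in accuracy.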

The core objectives include: (1) designing algorithms that improve inference accuracy while minimizing latency and bandwidth usage; (2) providing theoretical guarantees for the proposed methods, particularly in terms of regret minimization; and (3) benchmarking these algorithms using real-world data from Scania’s operational vehicles. The research will contribute to optimizing deep learning model deployment, ensuring scalable and efficient AI integration in industrial applications while maintaining cost-effectiveness and system reliability.

Background

Deep Learning (DL) models have become standard for data-driven tasks such as classification and predictive analytics due to their high accuracy. However, their computational and memory demands often require cloud-based deployment, which introduces challenges such as latency, bandwidth consumption, and security concerns. In response, edge computing has gained traction, enabling inference on resource-constrained Edge Devices (EDs) such as IoT sensors, mobile devices, and autonomous vehicles. While edge deployment reduces communication delays and enhances data privacy, the small models that fit on such devices often suffer from lower accuracy.

Scania, a global manufacturer of commercial vehicles, faces similar challenges in deploying DL models across its fleet for tasks such as autonomous driving, predictive maintenance, and sustainable operations. Placing models at different computation points involves a trade-off between accuracy and efficiency. This project explores cascaded inference, where small models operate locally and only complex cases are offloaded to more powerful computing resources, balancing accuracy with cost efficiency.

Partner Postdocs

This project brings together experts from multiple disciplines to address the challenges of deploying DL models efficiently across Scania’s fleet. Researchers from machine learning, optimization, embedded systems, and automotive engineering will collaborate to develop cascaded inference strategies that optimize accuracy, latency, and resource usage.

Scania’s senior data scientists, Dr. Sophia Zhang Pettersson and Dr. Kuo-Yun Liang, provide real-world insights into vehicle data, predictive maintenance, and cost modelling. Associate Prof. Lei Feng contributes expertise in Bayesian optimization and deep learning techniques for edge computing.

This collaboration ensures that theoretical advancements in machine learning align with practical deployment challenges in commercial vehicles. By integrating perspectives from academia and industry, the project fosters innovation in scalable AI solutions, leading to efficient, adaptive, and cost-effective DL deployment across connected fleets.

Supervisor

KTH researchers, led by Prof. James Gross, contribute expertise in hierarchical inference, algorithm development, and performance guarantees.